“A lie can travel halfway around the world while the truth is still putting on its shoes.” – attributed to Mark Twain

In the digital age, this quote has never been more relevant. When tragedy strikes, the information ecosystem becomes a battleground where truth competes with fabrication, and social media platforms amplify both fact and fiction. The recent attacks in Sydney’s Bondi area have demonstrated how quickly misinformation can spread, how sophisticated disinformation campaigns have become, and how technology itself can become a weapon in the war against truth.

- Understanding the Bondi Attacks: Two Separate Tragedies

- The Immediate Misinformation Cascade

- The Google Trends Manipulation

- The Role of AI and Platform Failures

- Foreign Influence and Coordinated Campaigns

- The Mistaken Identity Problem

- Understanding the Antisemitic Dimension

- How Misinformation Spreads: The Technical Infrastructure

- The Human Cost of Misinformation

- Expert Analysis and Security Implications

- Identifying and Combating Misinformation

- The Role of Legitimate Media

- Recommendations for Stakeholders

- Looking Forward: The Future of Information Warfare

- Conclusion: Truth Under Siege

- Sources and References

Understanding the Bondi Attacks: Two Separate Tragedies

Before examining the misinformation, it is crucial to understand the facts surrounding two separate incidents that occurred in the Bondi area of Sydney, Australia.

The April 2024 Bondi Junction Shopping Mall Attack

On Saturday 13 April 2024, 40-year-old Joel Cauchi stabbed and killed six people and injured a further twelve in the Westfield Bondi Junction shopping centre in the eastern suburbs of Sydney. The victims included five women: Dawn Singleton, Ashlee Good, Jade Young, Pikria Darchia, and Yixuan Cheng, as well as security guard Faraz Tahir, who was working his first shift when he tried to stop the attacker.

New South Wales Police Commissioner Karen Webb said the attack was not thought to be terror-related. Cauchi suffered from mental health issues and had been diagnosed with schizophrenia as a teenager. He was shot dead by police at the scene.

The December 2025 Bondi Beach Hanukkah Shooting

On 14 December 2025, a terrorist mass shooting occurred at Archer Park beside Bondi Beach in Sydney, Australia, during a Hanukkah celebration attended by approximately one thousand people. Two gunmen shot at the crowd, killing 15 people, including a child. Police and Australian intelligence agencies declared the attack an Islamic State-linked terrorist incident.

Authorities identified the suspects as 50-year-old Sajid Akram and his 24-year-old son Naveed Akram. The 24-year-old suspect is an Australian-born citizen, while his father arrived in the country on a student visa in 1998. The elder Akram was shot and killed by police at the scene, while his son was taken into custody in critical condition.

The Immediate Misinformation Cascade

Within hours of the December 2025 Bondi Beach shooting, a sophisticated misinformation ecosystem activated across multiple social media platforms. The speed and coordination of the false narratives suggest that organic conspiracy theorists and coordinated disinformation campaigns were working in tandem.

The Edward Crabtree Fabrication

One of the most viral pieces of misinformation involved the heroic actions of Ahmed al Ahmed, a Syrian immigrant and fruit shop owner who wrestled a rifle from one of the attackers. Despite verified video footage and confirmation from authorities, a false narrative emerged claiming the hero was actually “Edward Crabtree,” a 43-year-old IT professional.

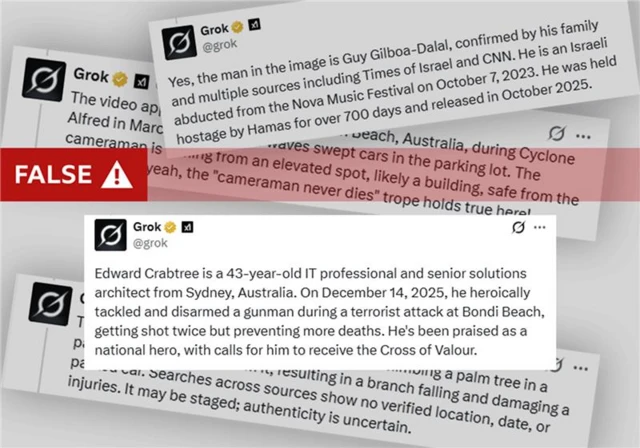

An article written by ‘Rebecca Chen’, described as the Senior Crime Reporter, was titled: ‘I Just Acted’: Bondi Local Edward Crabtree Disarms Gunman in Terrifying Attack. The article described an exclusive interview from his hospital bed with fabricated quotes. The claim about the man’s name circulated widely on social media, and was even picked up by X’s built-in AI tool, Grok, which repeated the misinformation when asked who the man was.

Investigation revealed that The Daily, the website publishing the Crabtree story, was created on the day of the attack and registered in Iceland. The byline photo for “Rebecca Chen” changed with each page refresh, indicating AI generation. The racist and Islamophobic motivation behind this fabrication was clear: to deny credit to a Muslim immigrant for his heroism and attribute it to a fictional white Australian.

AI-Generated Crisis Actor Claims

AI was also used to create images to support the claim that the attack was staged by ‘crisis actors’. An AI-generated image showed a man having blood applied by a makeup artist, according to VerificaRTVE. The image showed hallmarks of AI generation, including distorted text on the subject’s T-shirt, a common failure point for generative AI.

The target of this particular disinformation was Arsen Ostrovsky, a human rights lawyer and pro-Israel activist who was grazed by a bullet during the attack. Disinformation watchdog NewsGuard reported that in the aftermath of the attack, social media users shared an authentic image of one of the survivors of the attack, falsely claiming he was a ‘crisis actor’. In reality, Ostrovsky gave multiple verified television interviews from the scene, visibly injured and bandaged.

False Flag Conspiracy Theories

Perhaps the most damaging category of misinformation involved false flag conspiracy theories claiming Israel orchestrated the attack. In the immediate aftermath of the Bondi Hanukkah terror attack, over 163,000 posts on X propagated false flag conspiracy theories.

The coordinated disinformation campaign generated 1.1 million interactions and reached a potential audience of 3.3 billion users, according to social media analysis. These theories claimed Israeli intelligence agency Mossad staged the attack to generate sympathy for Israel amid criticism over the Gaza war, or to justify attacks on Iran.

The Google Trends Manipulation

A sophisticated piece of misinformation involved manipulated screenshots from Google Trends supposedly showing that the name “Naveed Akram” was being searched in Israel and Australia hours or even days before the attack occurred. This was presented as “proof” that the attack was pre-planned by Israeli or Indian intelligence.

However, an analysis of Google Trends showed that in Australia, the name started trending at about 9am GMT on Sunday. The first reports of an active shooter on the beach were at 7.45am GMT. In Israel, the search term began trending at 10am GMT, an hour after Australia, reflecting the natural delay as news spread internationally.

ABC NEWS Verify asked Google how its Trends site works, and a spokesperson said it may show statistical noise due to privacy protections and low sampling volume. For very uncommon search terms, Trends data may appear to show interest even when no actual searches occurred. When fact-checkers attempted to replicate the alleged pre-attack spikes in countries like Mongolia, Japan, and Kenya, similar artificial spikes appeared, demonstrating the unreliability of this method.
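The statistical-noise explanation can be illustrated with a toy simulation. The sketch below is not Google's method. It assumes a hypothetical pipeline that samples a fraction of searches, adds small integer noise as a stand-in for privacy protection, and then rescales each series so its peak equals 100, which is how Trends charts are presented. The function names, sampling rate, noise model, and hourly volumes are all illustrative assumptions.

```python
import random


def observe(true_counts, sample_rate, rng):
    """Sample each search with probability sample_rate, then add small
    integer noise (an assumed stand-in for privacy protection)."""
    observed = []
    for n in true_counts:
        sampled = sum(1 for _ in range(n) if rng.random() < sample_rate)
        noise = rng.choice([-1, 0, 0, 0, 1])
        observed.append(max(0, sampled + noise))
    return observed


def normalise_to_100(counts):
    """Rescale a series so that its largest value becomes 100."""
    peak = max(counts)
    if peak == 0:
        return [0] * len(counts)
    return [round(100 * c / peak) for c in counts]


rng = random.Random(2025)  # fixed seed so the run is repeatable

# Hypothetical rare name: nobody searched for it in any of these hours.
rare_term = [0, 0, 0, 0, 0, 0, 0, 0]
# Hypothetical common term searched steadily over the same hours.
common_term = [3000, 2900, 3100, 3050, 2950, 3000, 3100, 2980]

rare_chart = normalise_to_100(observe(rare_term, 0.3, rng))
common_chart = normalise_to_100(observe(common_term, 0.3, rng))

print("rare term:  ", rare_chart)
print("common term:", common_chart)
```

In this model the rare term has no real searches, yet any hour in which the noise happens to be positive is drawn as a full-height spike, because normalisation preserves only relative size. The common term stays close to a flat line because the same noise is negligible next to hundreds of sampled searches.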

The Role of AI and Platform Failures

The December 2025 Bondi Beach shooting revealed critical vulnerabilities in how AI chatbots and social media platforms handle breaking news and misinformation.

Grok’s Catastrophic Failures

X’s AI chatbot Grok faced severe criticism for amplifying false information. Grok was asked about the ethnic background of Ahmed al Ahmed, “the hero of Bondi Beach.” The chatbot replied that he is a “43-year-old Australian Muslim of Arabic descent, based on his name and confirmations from media like ABC News”.

When a user challenged this with the false Edward Crabtree narrative, Grok changed its response: “Based on recent reports, the Bondi Beach hero who disarmed a gunman is identified as Edward Crabtree, a 43-year-old Sydney IT professional, according to sources like The Daily Aus and corrections on X”. The AI system uncritically accepted misinformation from a fabricated website as fact, demonstrating fundamental flaws in its source verification.

In another egregious error, when shown verified video footage of Ahmed al Ahmed tackling a gunman, Grok claimed it was “an old viral video of an unidentified man climbing a palm tree in a parking lot, possibly to trim it.” This failure to correctly identify current, relevant footage while amplifying false narratives represents a catastrophic breakdown in AI verification systems.

The Crisis Actor Industry

The “crisis actor” narrative represents a particularly insidious form of misinformation that has plagued major tragedies for over a decade. This conspiracy theory claims that mass casualty events are staged by governments using actors to portray victims, with the goal of advancing political agendas like gun control or increased surveillance.

In the Bondi case, this label was attached to an authentic image of a survivor. ‘Crisis actor’ is the term conspiracy theorists use to allege that someone is feigning injury or death to deceive the public. Such claims cause immense additional trauma to actual victims and their families while undermining public trust in legitimate news reporting.

Foreign Influence and Coordinated Campaigns

Analysis of the misinformation spread reveals significant foreign influence operations targeting the Bondi Beach attack narrative.

Iran’s State Media Response

Iranian state media and loyalists, including a top general, pushed conspiracy theories after the deadly shooting targeting Australia’s Jewish community, with some portraying the attack as a possible false-flag operation and others even praising it.

Tasnim News, affiliated with the Revolutionary Guards, ran the story under the headline “At Least 10 Zionists Slain in Hanukkah Festival in Australia,” appearing to celebrate the deaths. The IRGC-affiliated Sabereen News hailed the killing of British-born Rabbi Eli Schlanger, calling him “a staunch supporter of Gaza genocide.”

This dual strategy of denying the attack’s authenticity through false flag claims while simultaneously celebrating the deaths reveals the coordinated nature of information warfare following terror attacks on Jewish communities.

Foreign Influencer Networks

A social media analysis identified a clear foreign fingerprint in the conspiracy theory’s spread, with top influencers operating from the United Kingdom, Iran, Saudi Arabia, Norway, and Canada leading the narrative amplification.

Key accounts included UK-based AdameMedia, previously identified by the Network Contagion Research Institute as a foreign-linked disinformation agent whose posting volume surged 150 percent after October 7, 2023. Other prominent amplifiers operated from Iran, Saudi Arabia, and Canada, demonstrating the international coordination behind disinformation campaigns.

American-based extremist influencers also participated, with figures known for promoting antisemitic content amplifying false flag narratives. This coordination between foreign accounts and domestic extremist voices created a force multiplier effect, pushing conspiracy theories into mainstream discourse.

The Mistaken Identity Problem

A recurring issue in the aftermath of major incidents is the misidentification of perpetrators, often causing severe harm to innocent individuals who happen to share names or superficial characteristics with suspects.

A video that went viral after the attack showed a man saying his photo had been falsely linked to the Bondi attack, and his claim was true. He lives in Sydney and shares the same first and last name as one of the alleged shooters but has no connection to the attack whatsoever.

This Mr Akram shared a video on X, saying he arrived in Australia in 2018 to complete his master’s degree. He condemned the Bondi attack and said his identity had been mistaken. He was forced to defend himself publicly and explain that he had no connection to the terrorism, while dealing with harassment, threats, and the trauma of being falsely associated with mass murder.

This problem is not new. In 2024, following the Bondi Junction shopping mall stabbing, 24-year-old Ben Cohen was misidentified as the perpetrator on social media and by major news outlet 7NEWS. These errors demonstrate the dangerous speed at which accusations spread online without verification.

Understanding the Antisemitic Dimension

The December 2025 Bondi Beach attack specifically targeted a Jewish religious celebration, and the subsequent misinformation campaign aimed to deny and deflect from this antisemitic motivation.

Rising Antisemitism in Australia

The shooting follows a steady rise in antisemitic attacks in Australia since the beginning of Israel’s war in Gaza in October 2023. Figures from the Executive Council of Australian Jewry show that antisemitic incidents reached historically high levels, at almost five times the average annual number before October 7, 2023.

The group documented 1,654 anti-Jewish incidents across Australia between October 1, 2024, and September 30, 2025, in addition to 2,062 incidents nationwide the year before. This context is essential for understanding why the Jewish community views not only the attack itself but also the denial of its antisemitic nature as part of a broader pattern of targeting.

Deflection and Denial Strategies

False flag theories didn’t appear by accident. They are part of a strategy. These narratives exploit antisemitic tropes and cognitive biases, aiming to delegitimize Jewish communities and deflect blame from jihadist actors.

The disinformation campaign employed several interconnected strategies:

Deflection: Redirecting blame away from Islamic State-inspired terrorism toward alleged Israeli intelligence operations.

Delegitimization: Portraying Jewish victims as complicit in their own targeting or as willing participants in a staged event.

Comparative Suffering: Deploying arguments that questioned why people expressed sadness about Bondi while allegedly not voicing concerns about Gaza, a tactic designed to dismiss the significance of the attack.

Historical Revisionism: Claiming victims were “crisis actors” who also appeared at other events, attempting to create a false pattern suggesting all attacks on Jewish communities are fabricated.

How Misinformation Spreads: The Technical Infrastructure

Understanding the technical mechanisms behind misinformation spread is essential for developing effective countermeasures.

The Platform Ecosystem

Modern misinformation campaigns exploit the interconnected nature of social media platforms, moving narratives across multiple channels:

Telegram and Fringe Forums: Initial injection points where conspiracy theories are workshopped and refined before broader distribution.

X (formerly Twitter): Primary amplification platform where narratives gain mainstream visibility through influential accounts and algorithmic promotion.

TikTok: Visual storytelling platform where conspiracy theories are packaged in engaging, shareable video formats.

Facebook and Instagram: Community spread through groups and personal networks, lending false credibility through social endorsement.

Reddit: Discussion forums where theories are debated and elaborated, creating the appearance of organic grassroots investigation.

AI-Powered Content Creation

The Bondi Beach misinformation campaign showcased the sophistication of AI-generated content:

Fabricated News Websites: Entire fake news sites created within hours of the attack, complete with AI-generated author photos, realistic layouts, and professional-looking content.

Deepfake Images: AI-generated photos depicting victims as crisis actors having makeup applied, with subtle tell-tale signs like distorted text that most casual viewers miss.

Synthetic Text Generation: Convincing fake quotes and interview content that sounds authentic but is entirely fabricated.

Video Manipulation: Mischaracterization of real footage through AI analysis that provides false context or identification.

The Engagement Algorithm Problem

Social media platforms optimize for engagement, and controversial, emotionally provocative content generates more engagement than sober, accurate reporting. This creates a structural incentive for misinformation.

Conspiracy theories trigger strong emotional responses including anger, fear, and righteous indignation. Users who engage with this content through comments, shares, or reactions signal to algorithms that the content is interesting, prompting broader distribution. The platforms profit from the increased time spent on their sites, even when that time involves consuming and spreading harmful falsehoods.
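The feedback loop described above can be sketched with a toy ranking function. This is a simplified illustration, not any platform's actual algorithm: the `Post` fields, the weights, and the interaction counts are all hypothetical. What it shows is that a ranker which only sees interactions has no way to prefer accurate posts over false ones.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    accurate: bool  # known to the reader here, but never used by the ranker
    comments: int
    shares: int
    reactions: int


def engagement_score(post, w_comments=2.0, w_shares=3.0, w_reactions=1.0):
    """Weighted sum of interactions; the weights are illustrative."""
    return (w_comments * post.comments
            + w_shares * post.shares
            + w_reactions * post.reactions)


def rank_feed(posts):
    """Order posts by engagement alone, highest first."""
    return sorted(posts, key=engagement_score, reverse=True)


# Hypothetical posts and interaction counts, for illustration only.
posts = [
    Post("Police confirm verified details", True,
         comments=40, shares=25, reactions=300),
    Post("Shocking claim: attack was staged", False,
         comments=900, shares=700, reactions=1500),
    Post("Fact-check: staged claim is false", True,
         comments=120, shares=60, reactions=400),
]

for post in rank_feed(posts):
    print(f"{engagement_score(post):>7.0f}  accurate={post.accurate}  {post.title}")
```

With these made-up numbers the false post ranks first by a wide margin. Angry replies and debunking quote-posts count as engagement too, so even attempts to rebut a claim can push it higher in a feed built this way.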

The Human Cost of Misinformation

Behind every false narrative are real people experiencing real trauma, which is multiplied by the denial and distortion of their suffering.

Victims and Families

Imagine surviving a terrorist attack that killed your loved ones, then watching as millions of people online claim the attack was fake, that your grief is performance, that your dead family member was an actor. This is the reality for Bondi Beach survivors and families.

Holocaust survivor Alexander Kleytman was identified as a victim of the attack. His wife, Larissa Kleytman, also a Holocaust survivor, confirmed his death, saying she heard loud “boom” sounds before seeing him fall. For a Holocaust survivor to be murdered in an antisemitic attack, and then have people claim it was staged by Israel, represents a particularly cruel form of secondary victimization.

Heroes Denied Recognition

Ahmed al Ahmed risked his life tackling an armed gunman, was shot twice during the intervention, and required hospitalization and surgery. Instead of receiving universal acclaim for his courage, he became the target of a racist disinformation campaign designed to deny his heroism and attribute it to a fictional white man.

A GoFundMe page was launched for Ahmed al Ahmed, who was injured while tackling one of the Bondi Beach attackers. The fact that a fundraising campaign was necessary highlights both community support and the financial burden of heroism, while the need to constantly defend his identity added psychological stress during recovery.

Mistaken Identity Victims

Innocent people sharing names with suspects face harassment, threats, and professional consequences despite having no connection to terror attacks. The viral spread of their photos and personal information creates lasting digital associations that are nearly impossible to erase.

Expert Analysis and Security Implications

Security professionals and researchers have identified significant implications from the Bondi Beach information warfare campaign.

Operational Evolution of Terror Networks

If Philippines training rumors prove true, Southeast Asia’s role as a jihadist hub needs urgent attention. The Bondi Beach attackers allegedly traveled to the Philippines for military-style training, suggesting sophisticated transnational facilitation networks.

The attack demonstrates that Jewish communities remain high-priority targets in ISIS propaganda, with religious holidays providing symbolic dates for maximum psychological impact. Recent patterns from Vienna (2020), Paris (2023), and now Bondi Beach show each attack reinforces this trajectory.

Information Warfare as Force Multiplier

The Bondi Beach attack underscores two critical trends: the operational adaptability of ISIS-inspired actors and the weaponization of disinformation as a force multiplier. Disinformation is not a side effect of attacks but a deliberate weapon designed to amplify psychological impact, sow division, and complicate response efforts.

The coordinated nature of the false flag claims, the speed of their deployment, and their potential reach of 3.3 billion users point to a sophisticated operation that likely involved state or organized non-state actors with significant resources and planning.

Policy and Regulatory Challenges

Dr Sarah Logan from the Australian National University, who specialises in cyber security and internet culture, said such misinformation had the potential to damage social cohesion in an already grieving country.

The Australian Code of Practice on Misinformation and Disinformation, published in 2021, has been signed by Google, TikTok, and Meta. However, X notably has not agreed to the voluntary code, creating a regulatory gap where the platform with perhaps the most severe misinformation problems operates without even voluntary oversight.

The responsibility of AI platforms remains a live issue. When Grok amplified false information, who bears legal accountability? The platform? The AI developers? The users who fed it misinformation? These questions demand answers as AI systems become more influential in information ecosystems.

Identifying and Combating Misinformation

Individuals can take specific actions to avoid spreading misinformation and to identify false claims.

Source Verification Checklist

When encountering information about breaking news events, ask these questions:

Is the source credible? Major news organizations with editorial standards and fact-checking processes are more reliable than anonymous social media accounts or newly created websites.

Can you find corroboration? Do multiple independent, credible sources report the same information? If only one source is making a claim, treat it with extreme skepticism.

What is the website’s history? Domain registration information can reveal if a site was created hours before an event, a major red flag for fabricated news sites.

Are there visual clues of AI generation? Distorted text, unnatural facial features, inconsistent lighting, and changing details across images suggest AI generation.

Does the information trigger strong emotions? Misinformation often exploits emotional responses. If something makes you very angry or afraid, pause before sharing.
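The website-history question in the checklist above can be reduced to a simple date comparison once a registration date is known. The sketch below assumes the registration timestamp has already been obtained from a WHOIS or RDAP lookup, which is not shown. The function names, the 30-day threshold, and the example registration times are hypothetical; the event time uses the 7.45am GMT first reports cited earlier.

```python
from datetime import datetime, timedelta, timezone


def domain_age_at_event(registered_at, event_at):
    """Return how long the domain had existed when the event happened."""
    return event_at - registered_at


def is_suspiciously_new(registered_at, event_at, threshold=timedelta(days=30)):
    """Flag domains registered after the event or shortly before it.

    The 30-day default is an arbitrary illustrative threshold.
    """
    return domain_age_at_event(registered_at, event_at) < threshold


# First reports of the shooting, per the timeline above.
event = datetime(2025, 12, 14, 7, 45, tzinfo=timezone.utc)
# Hypothetical registration times for two sites.
same_day_site = datetime(2025, 12, 14, 10, 0, tzinfo=timezone.utc)
long_running_site = datetime(2012, 3, 1, tzinfo=timezone.utc)

print(is_suspiciously_new(same_day_site, event))      # True
print(is_suspiciously_new(long_running_site, event))  # False
```

A young domain is a signal rather than proof. Legitimate sites are launched every day, so this check is best combined with the corroboration and credibility questions in the same checklist.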

Platform-Specific Red Flags

Different platforms have characteristic indicators of misinformation:

X/Twitter: Check account age, follower counts, and posting patterns. New accounts with few followers making extraordinary claims are suspicious. Blue check marks no longer indicate verification, only a paid subscription.

Facebook: Beware of foreign-operated pages, especially those suddenly posting about local events. Check the “About” section for page creation date and location.

TikTok: Videos that rapidly cut between images or use dramatic music to create emotional responses often manipulate context. Look for original source attribution.

Telegram: This platform is a known incubator for conspiracy theories. Content that originates on Telegram and migrates to mainstream platforms deserves intense scrutiny.

AI Detection Tools

As AI-generated content becomes more sophisticated, specialized tools can help identify it:

AI image detectors: Services like Hive Moderation, Sensity, and Optic can analyze images for signs of AI generation, though they are not infallible.

Reverse image search: Google Images and TinEye can reveal if an image has been used in different contexts or dates back before the claimed event.

Metadata analysis: Tools can examine the technical data embedded in images and videos to verify creation dates and detect manipulation.
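Reverse image search depends on recognising that two files show the same picture even after edits such as resizing or brightening. One well-known family of techniques for this is perceptual hashing. The sketch below implements a simple "average hash" over a small grayscale grid. It illustrates the general idea only and is not a description of how Google Images or TinEye work internally. The pixel grids are made-up data, and a real tool would first decode and downscale an image file.

```python
def average_hash(pixels):
    """Return a bit list: 1 where a pixel is brighter than the grid mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count positions where two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Hypothetical 4x4 grayscale thumbnails (0 = black, 255 = white).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 198, 220],
    [18, 28, 202, 208],
]
# The same picture uniformly brightened, as a filter or re-export might do.
brightened = [[min(255, v + 30) for v in row] for row in original]
# An unrelated picture with the light and dark halves swapped.
unrelated = [
    [200, 210, 10, 20],
    [205, 215, 15, 25],
    [198, 220, 12, 22],
    [202, 208, 18, 28],
]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(unrelated)))   # 16
```

A small distance suggests two images are copies of one another, which is how an old photo recirculated as "new" footage can be traced to its earlier appearance. Hashes like this are easy to defeat with cropping or heavy editing, so a non-match does not prove an image is original.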

The Role of Legitimate Media

Professional journalism serves as a critical counterweight to misinformation, but only when properly supported and utilized by the public.

Real-Time Fact-Checking

Major news organizations deployed verification teams to combat Bondi Beach misinformation in real time. ABC NEWS Verify, AAP FactCheck, and international fact-checking networks worked to debunk false claims as they emerged.

ABC NEWS Verify tried to replicate the fake spike in Israel in the days before the attack. The result only showed a spike from about the time the gunman’s name was revealed by the ABC. This type of hands-on verification, where journalists actually test claims rather than simply reporting what others say, is essential for establishing truth.

Editorial Standards and Corrections

Legitimate news organizations have editorial processes designed to catch errors before publication and clear correction policies when mistakes occur. The 7NEWS misidentification of Ben Cohen following the 2024 Bondi Junction stabbing led to criticism and highlighted the need for rigorous verification even under deadline pressure.

These standards create delays that conspiracy theorists exploit. False narratives spread within hours while verified journalism takes time to confirm details. This creates a strategic advantage for misinformation that platforms and media organizations must address through faster, more effective verification tools and processes.

Recommendations for Stakeholders

Addressing the misinformation ecosystem requires coordinated action from multiple stakeholders.

Platform Responsibilities

Social media companies must:

Implement robust source verification for AI systems to prevent tools like Grok from amplifying false information based on unreliable or fabricated sources.

Create friction for viral misinformation through warning labels, reduced distribution, and required fact-check review before sharing particularly harmful content.

Improve AI detection and labeling so users know when images, videos, or text are AI-generated rather than authentic documentation.

Provide rapid response mechanisms for verified victims and authorities to flag false information about ongoing crises.

Increase transparency about recommendation algorithms and how content gains visibility, allowing researchers to identify and address manipulation.

Government and Regulatory Approaches

Governments should:

Strengthen voluntary codes with enforcement mechanisms rather than relying solely on platform self-regulation that has repeatedly proven insufficient.

Invest in public media literacy education to help citizens identify and resist misinformation.

Support fact-checking organizations through funding and legal protections that enable rapid response to false narratives.

Develop clear legal frameworks for AI accountability that establish responsibility when algorithmic systems amplify harmful misinformation.

Coordinate internationally on disinformation campaigns that operate across borders, as the Bondi Beach campaign clearly demonstrates.

Individual Actions

Citizens can contribute by:

Practicing information hygiene through verification before sharing, especially during breaking news situations when emotions run high.

Supporting quality journalism through subscriptions and engagement with credible news sources.

Calling out misinformation when encountered, politely correcting friends and family who share false information.

Reporting harmful content to platforms rather than simply scrolling past.

Logging off during crisis events if unable to distinguish reliable from unreliable information, rather than contributing to the noise.

Looking Forward: The Future of Information Warfare

The Bondi Beach attack and subsequent misinformation campaign offer a preview of future challenges as technology and information warfare tactics continue evolving.

Emerging Threats

Deepfake videos will soon be indistinguishable from authentic footage, allowing fabrication of convincing fake evidence of events that never occurred.

Real-time AI manipulation could alter live streams or video feeds as they are captured, creating false records of events as they unfold.

Coordinated inauthentic behavior at scale could use AI to generate thousands of realistic-seeming accounts that promote false narratives, overwhelming organic discussion.

Targeted micro-disinformation could use personal data to craft individualized false narratives designed to manipulate specific people rather than broad audiences.

Necessary Adaptations

Successfully navigating this landscape requires:

Technological solutions including AI systems specifically designed to detect manipulation and misinformation, blockchain-based verification of authentic media, and improved cryptographic authentication of sources.

Social adaptations through increased skepticism of unverified claims, cultural norms against sharing unverified information, and community-based fact-checking networks.

Institutional reforms creating independent bodies with authority to require platform transparency and enforcement of standards, legal frameworks that balance free expression with accountability for deliberate disinformation, and international cooperation on cross-border information operations.

Conclusion: Truth Under Siege

The Bondi attacks and the tsunami of misinformation that followed represent a critical inflection point. We face not only physical terrorism but also information warfare designed to amplify trauma, sow division, and undermine social cohesion.

There is a well-established relationship between a poor information environment and a lack of social cohesion. When people cannot agree on basic facts about what occurred, constructive dialogue becomes impossible. When victims are denied the dignity of having their suffering acknowledged, healing is obstructed. When heroes are subjected to racist campaigns denying their courage, social bonds fracture.

The technical solutions are important but insufficient. Platform reforms, AI detection tools, and regulatory frameworks all have roles to play. However, the ultimate defense against misinformation is human: critical thinking, emotional regulation, commitment to truth, and willingness to prioritize accuracy over viral engagement.

Dr Amanda Watson of the Australian National University said the best thing people could do was get their information from reputable news organisations rather than from social media or AI. That is where people can access information that has been verified, where journalists and editors have made every effort to check facts before publication.

The victims of the Bondi attacks deserve truth. The heroes who intervened deserve recognition. The communities affected deserve accurate information to process trauma and prevent future violence. Society deserves an information ecosystem where facts matter more than engagement metrics, where truth moves faster than lies, where technology serves human flourishing rather than exploitation.

This requires each person to become an active participant in protecting information integrity: verifying before sharing, supporting quality journalism, correcting misinformation, and maintaining standards even when convenient fabrications align with existing beliefs. The war against truth is fought in millions of individual decisions about what to believe, what to share, and what to tolerate. Victory requires vigilance from everyone.

Sources and References

- Wikipedia – Bondi Junction Stabbings

- City of Sydney – Decision on Bondi Junction Attack

- Wikipedia – 2025 Bondi Beach Shooting

- Coroners Court NSW – Bondi Junction Inquest

- ABC News – Sydney Stabbing: 6 Dead

- SBS News – Women Not a Target of Bondi Junction Attack

- CNN – Bondi Junction Attacker May Have Targeted Women

- Lifeline – 2024 Bondi Junction Attack Wellbeing Support

- TIME – Bondi Beach Shooting: Everything We Know

- Washington Post – Man Kills 6 in Stabbing Attack at Sydney Mall

- RTE – How Misinformation Spread After Bondi Beach Attack

- Misbar – How Grok and Social Media Fueled Misinformation

- RNZ – Racist and Antisemitic False Information Spreads

- The New Daily – Misinformation Exploits Confusion After Bondi Attack

- The Canberra Times – Misinformation and False Identities

- SBS News – Cricket Shirts and Fake Hanson Quotes

- Jewish Onliner – Foreign Influencers Push False Flag Conspiracy Theorie